On Comparing Local and Remote Files with Swift Explorer

Since version 1.0.4, Swift Explorer has included a function to compare local files or directories against their remote counterparts.

Objectives

One of the main benefits of this function is finding obsolete files (no longer available locally) that should be deleted from the remote storage. Conversely, files that have been accidentally removed from the local storage can be easily identified and downloaded from the cloud. Another common use is to gain precise control over which files should be updated or uploaded when an already-uploaded directory has become outdated.
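The situations above boil down to classifying every relative path into one of four cases. The following Java sketch is not Swift Explorer's code; it is a minimal illustration that assumes both sides have already been reduced to a map from relative path to hex MD5 (for non-segmented objects, Swift reports the MD5 of the content as the object's ETag). `DirectoryDiff`, `Status`, and `compare` are hypothetical names.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Hypothetical sketch: classifies relative paths by comparing local and remote MD5 maps. */
public final class DirectoryDiff {

    public enum Status { LOCAL_ONLY, REMOTE_ONLY, DIFFERENT, EQUAL }

    private DirectoryDiff() {}

    /**
     * @param local  relative path -> hex MD5 of the local file
     * @param remote relative path -> hex MD5 reported by the object store (assumed: the ETag)
     * @return relative path -> status, sorted by path
     */
    public static Map<String, Status> compare(Map<String, String> local, Map<String, String> remote) {
        Set<String> paths = new TreeSet<>(local.keySet());
        paths.addAll(remote.keySet());
        Map<String, Status> result = new TreeMap<>();
        for (String path : paths) {
            String localMd5 = local.get(path);
            String remoteMd5 = remote.get(path);
            if (remoteMd5 == null) {
                result.put(path, Status.LOCAL_ONLY);   // can be uploaded
            } else if (localMd5 == null) {
                result.put(path, Status.REMOTE_ONLY);  // delete from the cloud, or download
            } else if (localMd5.equalsIgnoreCase(remoteMd5)) {
                result.put(path, Status.EQUAL);        // nothing to do
            } else {
                result.put(path, Status.DIFFERENT);    // e.g. overwrite the remote copy
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> local = Map.of("a.txt", "aaa", "b.txt", "bbb", "c.txt", "ccc");
        Map<String, String> remote = Map.of("b.txt", "bbb", "c.txt", "xxx", "d.txt", "ddd");
        compare(local, remote).forEach((path, status) -> System.out.println(path + " -> " + status));
    }
}
```

Running `main` prints `a.txt -> LOCAL_ONLY`, `b.txt -> EQUAL`, `c.txt -> DIFFERENT`, and `d.txt -> REMOTE_ONLY`, one per line. Building the union of both key sets first guarantees that every path is visited exactly once, whichever side it lives on.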

Equalizing Directories

The directory comparison function can be accessed either from the StoredObject main menu or from the contextual menu (as depicted in the image below).

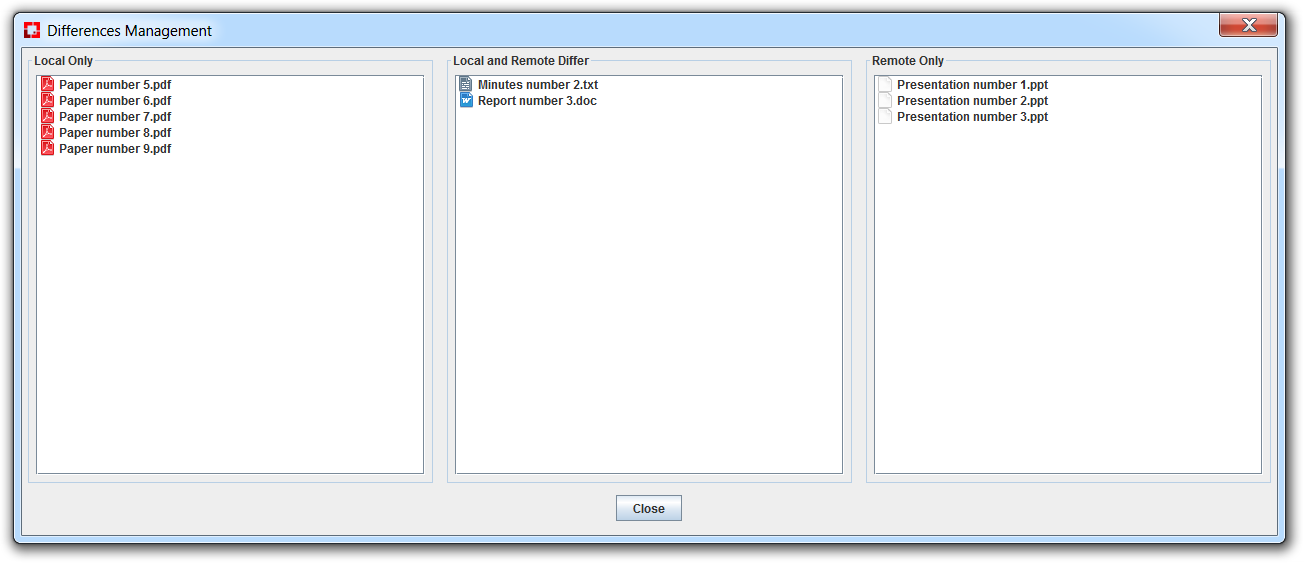

If discrepancies are found, a dialog listing them opens, as shown in the images below.

Updating or Uploading Files

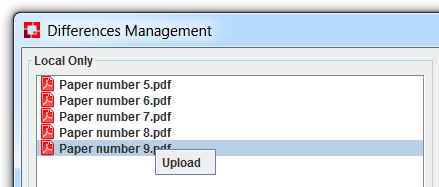

When a file is only present on the local disk, it can be uploaded to the cloud.

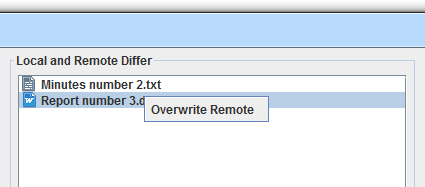

When a file is present on both the local disk and the cloud, but the two versions differ, the Overwrite Remote option will replace the version on the cloud with the local copy.

Note that if precise control is not required, the directory upload function (Upload Directory under StoredObject) with the option “overwrite existing files” can effortlessly update existing files and upload missing ones (for further details, see the post Uploading Large Directories Using Swift Explorer).

Removing or Downloading Files

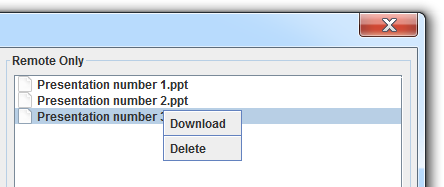

When a file is only present on the remote storage, the available options are either deleting it from the cloud or downloading it to the local storage.

Known Limitations for Version 1.0.4

When dealing with non-segmented files, there are no known issues: the comparison should work regardless of the client used to upload the files.

However, when dealing with large files that require segmentation, version 1.0.4 relies on the specific structure used by Swift Explorer (i.e., its naming convention and its use of a dedicated container to store segments). Consequently, if a different client was used to upload a large file, the comparison function will likely report the local and remote versions as different, even though they are identical.
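Part of what makes segmented files tricky is that, as far as we understand OpenStack Swift's large-object support, the ETag reported for a manifest is not the MD5 of the whole content but the MD5 of the concatenated hex MD5s of its segments (depending on the large-object type, the header value may also be wrapped in double quotes). The value therefore depends on where the segments were cut. The Java sketch below computes such a value locally, assuming fixed-size segments of a known size; it is illustrative only and does not reproduce how Swift Explorer 1.0.4 actually compares segmented files. `SegmentedEtag`, `compute`, and `toHex` are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/**
 * Hypothetical sketch: the MD5 of the concatenated hex MD5s of fixed-size segments.
 * Assumption: the file was cut into segments of exactly segmentSize bytes (last one may be shorter).
 */
public final class SegmentedEtag {

    private SegmentedEtag() {}

    /** Lower-case hexadecimal encoding of a digest. */
    public static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }

    public static String compute(InputStream in, long segmentSize)
            throws IOException, NoSuchAlgorithmException {
        if (segmentSize <= 0) {
            throw new IllegalArgumentException("segmentSize must be positive");
        }
        MessageDigest manifest = MessageDigest.getInstance("MD5"); // over the segment hashes
        MessageDigest segment = MessageDigest.getInstance("MD5");  // over the current segment
        byte[] buffer = new byte[8192];
        long inSegment = 0;
        int n;
        // Never read past the end of the current segment, so boundaries are exact.
        while ((n = in.read(buffer, 0, (int) Math.min(buffer.length, segmentSize - inSegment))) != -1) {
            segment.update(buffer, 0, n);
            inSegment += n;
            if (inSegment == segmentSize) {
                // digest() also resets the segment digest for the next segment
                manifest.update(toHex(segment.digest()).getBytes(StandardCharsets.US_ASCII));
                inSegment = 0;
            }
        }
        if (inSegment > 0) { // trailing, shorter segment
            manifest.update(toHex(segment.digest()).getBytes(StandardCharsets.US_ASCII));
        }
        return toHex(manifest.digest());
    }

    public static void main(String[] args) throws Exception {
        byte[] data = "0123456789".getBytes(StandardCharsets.US_ASCII);
        // Same bytes, different segment sizes: the two values generally differ.
        System.out.println(compute(new ByteArrayInputStream(data), 4));
        System.out.println(compute(new ByteArrayInputStream(data), 5));
    }
}
```

The demo shows why a comparison tied to one client's segmentation scheme can misreport identical content: change the segment size and the remote-style hash changes, even though the bytes do not.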

This flaw will be fixed in an upcoming release (most probably the next one, 1.0.5).

Note About Performance

We anticipate some complaints about performance when comparing two very large folders, and we apologize for that. Bear in mind that hashing hundreds of thousands of files, possibly large ones, takes time, and how much varies significantly with the hardware, especially its I/O capabilities. In addition, fetching hundreds of thousands of MD5 values from the cloud can be time-consuming as well, depending on various factors. Patience appears to be a good remedy here.
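The local side of this cost can be illustrated with a minimal Java sketch (not Swift Explorer's actual code). Each file is streamed through a fixed 64 KiB buffer, so the time grows with the total number of bytes read while the memory needed to hash any single file stays constant; the resulting map, however, holds one entry per file. `LocalMd5`, `md5Hex`, and `hashTree` are hypothetical names, and using `/` as the separator in keys is an assumption meant to match remote object names.

```java
import java.io.File;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Iterator;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Stream;

/** Hypothetical sketch: streaming MD5 of every regular file under a directory. */
public final class LocalMd5 {

    private LocalMd5() {}

    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }

    /** Hashes one file through a fixed buffer, so memory use does not depend on file size. */
    public static String md5Hex(Path file) throws IOException, NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("MD5");
        byte[] buffer = new byte[64 * 1024];
        try (InputStream in = Files.newInputStream(file)) {
            int n;
            while ((n = in.read(buffer)) != -1) {
                md.update(buffer, 0, n);
            }
        }
        return toHex(md.digest());
    }

    /** Maps each file's path relative to root (with '/' separators) to its MD5. */
    public static Map<String, String> hashTree(Path root) throws IOException, NoSuchAlgorithmException {
        Map<String, String> result = new TreeMap<>();
        try (Stream<Path> paths = Files.walk(root)) {
            Iterator<Path> it = paths.filter(Files::isRegularFile).iterator();
            while (it.hasNext()) {
                Path file = it.next();
                String key = root.relativize(file).toString().replace(File.separatorChar, '/');
                result.put(key, md5Hex(file));
            }
        }
        return result;
    }

    public static void main(String[] args) throws Exception {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        hashTree(root).forEach((path, md5) -> System.out.println(md5 + "  " + path));
    }
}
```

On Java 11 or later, `java LocalMd5.java /some/dir` runs the sketch directly and prints one `md5  path` line per file.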

Besides speed, an out-of-memory error is quite possible, because this feature can be memory-demanding. Memory use obviously depends on the number of files, but it has a linear asymptotic upper bound in that number, which is generally not too bad. If enough RAM is available, you can be more generous with the JVM and give it more heap by running the Jar file with the -Xmx<size> option.
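To check that a larger -Xmx value was actually picked up, a tiny Java program can report the maximum heap the JVM will attempt to use, via `Runtime.maxMemory()`. This is a generic JVM check rather than a Swift Explorer feature, and `HeapCheck` is a hypothetical name.

```java
/** Hypothetical helper: reports the maximum heap the running JVM will attempt to use. */
public final class HeapCheck {

    private HeapCheck() {}

    /** Maximum heap in MiB (Runtime.maxMemory() returns Long.MAX_VALUE if there is no limit). */
    public static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("Max heap: " + maxHeapMiB() + " MiB");
    }
}
```

For example, on Java 11 or later, `java -Xmx2g HeapCheck.java` should report a value close to (often slightly below) 2048 MiB; the same -Xmx option is what gets passed when launching the Swift Explorer Jar file with `java -jar`.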

The current implementation may not be optimal, so there is probably some room for improvement.

Meanwhile, if performance becomes a severe issue, we recommend, when applicable, a divide-and-conquer approach: compare the sub-directories one by one instead of the whole parent directory.
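The divide-and-conquer idea can be sketched in Java as a loop over the immediate sub-directories of a parent, running one comparison per sub-directory. The per-directory comparison is left as a hypothetical callback (`SubtreeComparison`), since in practice it would be launched from Swift Explorer itself; `DivideAndConquer` and `compareOneByOne` are likewise hypothetical names. Note that files stored directly in the parent are not covered and would need a separate, shallow comparison.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical sketch: run a comparison on each immediate sub-directory in turn. */
public final class DivideAndConquer {

    private DivideAndConquer() {}

    /** Whatever comparison is run on one sub-directory (hypothetical callback). */
    @FunctionalInterface
    public interface SubtreeComparison {
        void compare(Path subDirectory) throws IOException;
    }

    /** Immediate sub-directories of root, sorted by path. */
    public static List<Path> subDirectories(Path root) throws IOException {
        List<Path> dirs = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(root, p -> Files.isDirectory(p))) {
            for (Path dir : stream) {
                dirs.add(dir);
            }
        }
        Collections.sort(dirs);
        return dirs;
    }

    /**
     * Compares sub-directories one after another. If each comparison releases its data
     * when done, only one subtree's hashes need to be in memory at any time.
     * Files stored directly in root are NOT covered here.
     */
    public static int compareOneByOne(Path root, SubtreeComparison comparison) throws IOException {
        List<Path> dirs = subDirectories(root);
        for (Path dir : dirs) {
            comparison.compare(dir);
        }
        return dirs.size();
    }

    public static void main(String[] args) throws IOException {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        int count = compareOneByOne(root, dir -> System.out.println("Comparing " + dir));
        System.out.println(count + " sub-directories processed");
    }
}
```

The design trades one long run for several shorter ones: peak memory is driven by the largest sub-directory rather than by the whole tree, and a failure only requires redoing one sub-directory.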